As a curtain-raiser on AI bias in healthcare, consider a skin-cancer detection algorithm that fails to detect skin cancer in individuals with darker skin. Algorithmic bias was perpetuated because inclusive data was not used: only light-skinned individuals were the source of the training data. This story can be reversed to achieve debiased, explainable, and pertinent predictions.

Once an AI Bias, not always an AI Bias. That’s the trailblazing course the world of AI is working to set. In the meantime, a recruiting algorithm on a professional networking platform errs on the side of word patterns. The algorithm lures a Senior Technical Writer with the ‘Interested in this Job?’ message meant for the position of Senior Technical Analyst. A classic case of algorithmic bias?

Working on a ‘word pattern’ rather than skill sets, the algorithm was built with a bias toward the word ‘Technical’. Had there been an explainability component, the model could have been interpreted, and Explainable AI would have shown the way to more accurate outcomes.

White-box models are taking over the black-box domain of AI, driving the transformation to bias-free, explainable, and transparent models.

What is AI Bias?

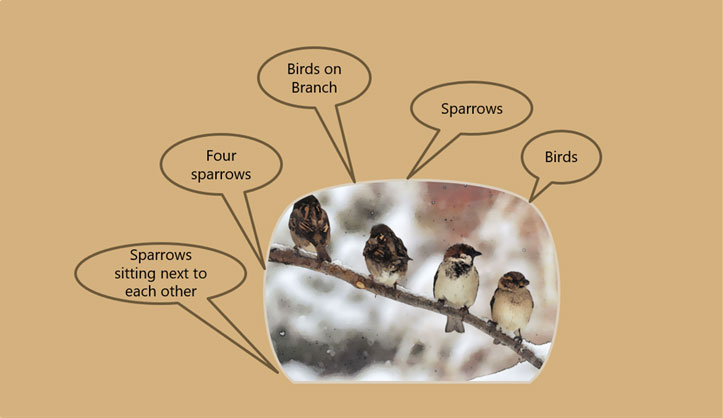

If the image shared here is flashed at you, how would you label it or recognize it?

In this image recognition exercise, most of us, when prompted to label the image, are likely to call out the descriptions captured in it. There is a chance, though, that we miss the ‘Brown Sparrow’ description. That could arise from ‘regional bias’: we might not be aware that blue sparrows exist, so the mind is trained to see the bird only as a sparrow.

How Does Bias Creep in?

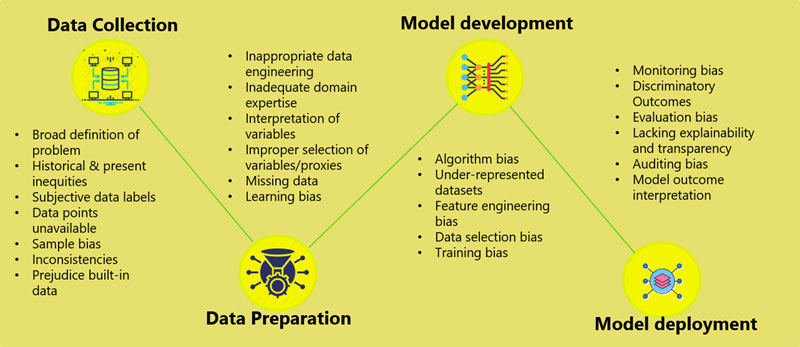

AI bias is spread across the AI pipeline, from data collection to model interpretation and deployment. It is imperative to uncover bias across the pipeline and mitigate it. There is growing concern over AI systems being susceptible to biases that manifest in applications ranging from voice recognition and facial recognition to medical decision-making. From historical bias in data to algorithmic bias in AI/ML, and behavioral and presentation bias during human review, bias manifests itself in different forms.

Tracing the Route to Biased Data and Biased Algorithm

If you want to trace the bias route, you must start with the data and the use case definition. Instead of ‘How to increase product sales?’ in Retail, you can narrow the scope to ‘How to increase this product SKU’s sales?’

A broad scope leaves its impact right from the data collection stage. Pre-training bias can set in through bias in historical data. As part of the debiasing strategy, establishing pre-training metrics such as class imbalance and KL divergence helps detect that bias before a model is trained, so that it can be mitigated.
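
The two pre-training metrics named above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the group names `A` and `B`, the toy records, and the helper function names are hypothetical and not taken from any particular toolkit. Class imbalance is computed here as the normalized difference in group sizes, and KL divergence compares the label distributions of the two groups.

```python
import math
from collections import Counter

def class_imbalance(groups, group_a, group_d):
    """(n_a - n_d) / (n_a + n_d): 0 means balanced, values near +/-1 mean one group dominates."""
    counts = Counter(groups)
    n_a, n_d = counts[group_a], counts[group_d]
    return (n_a - n_d) / (n_a + n_d)

def label_distribution(labels, outcomes):
    """Share of each outcome among the given labels, in the order of `outcomes`."""
    counts = Counter(labels)
    return [counts[o] / len(labels) for o in outcomes]

def kl_divergence(p, q):
    """KL(P || Q) = sum of p_i * log(p_i / q_i); assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical training records: (group, label), where label 1 is the favourable outcome.
records = [("A", 1)] * 6 + [("A", 0)] * 2 + [("B", 1)] * 1 + [("B", 0)] * 1

groups = [g for g, _ in records]
ci = class_imbalance(groups, "A", "B")

p_a = label_distribution([y for g, y in records if g == "A"], outcomes=[1, 0])
p_b = label_distribution([y for g, y in records if g == "B"], outcomes=[1, 0])
kl = kl_divergence(p_a, p_b)

print(f"class imbalance: {ci:.2f}")
print(f"KL divergence between label distributions: {kl:.4f}")
```

A class imbalance far from zero suggests one group is under-represented in the data, while a KL divergence well above zero suggests the groups have different outcome distributions before any model is trained. What counts as "too far" is a judgment call for each use case.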

Another case in point is missing data, which breeds data pre-processing biases. Model parameter estimates can be biased when data is missing, particularly when values are missing more often for some groups than for others. Any use case across industries that takes age and gender as features could induce bias, as in the case of loan approval prediction. Considering the bias in loan approval prediction, is there a way to explain the route of bias and mitigate it?
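
One simple way to probe the missing-data concern is to compare how often a feature is missing in each group. The sketch below is illustrative only: the applicant rows, the `age_group` and `income` field names, and the helper function are hypothetical, and a gap in missing rates is a prompt for closer inspection rather than proof of bias.

```python
from collections import defaultdict

def missing_rate_by_group(rows, group_key, feature):
    """Fraction of rows in each group where `feature` is absent or None."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row.get(feature) is None:
            missing[group] += 1
    return {group: missing[group] / totals[group] for group in totals}

# Hypothetical loan applicants; None marks a missing income value.
applicants = [
    {"age_group": "YA", "income": 52000},
    {"age_group": "YA", "income": 61000},
    {"age_group": "YA", "income": None},
    {"age_group": "YA", "income": 48000},
    {"age_group": "OA", "income": None},
    {"age_group": "OA", "income": 75000},
    {"age_group": "OA", "income": None},
    {"age_group": "OA", "income": 67000},
]

rates = missing_rate_by_group(applicants, "age_group", "income")
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of income values missing")
```

If one group's rate is much higher, dropping incomplete rows or filling them with a global average would affect that group disproportionately, which is one way pre-processing bias can enter.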

Bias can also enter through audit bias, as in the case of employment algorithms. From data collection to the interpretation of model outcomes, bias finds a way in to pollute the AI model and its outcomes.

Explainable AI for Bias Mitigation

Take the case of bank loan disbursal. The loan disbursal algorithm puts emphasis on one feature, age, giving way to Representative bias. If the loan disburser rejects low-risk older applicants, they have erred by falling prey to negative impressions formed about the group as a whole. Explainable AI helps deal with the situation on an ‘individual’ basis, guiding the disburser to understand how the individual differs from the average using pertinent conditions.

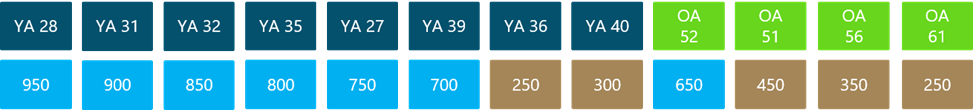

Explainability can be applied to any bias. Let’s revisit the problem stated above: loan disbursals based on age. Before looking at the predictions made by an ML model, let us assume that we adhere to ‘age parity’ as a fairness metric. Now, look at the predictions given by the loan disbursal prediction model against age and credit score. YA denotes the younger age group, between 27 and 40 years, and OA denotes the older age group, above 50 years.

The model is not an ambassador of ‘fairness’: 71.42% of YA applicants had their loans approved, whereas only 25% of OA applicants did. Explainable AI is apt for uncovering why the ML model made such predictions. By leveraging explainability, we can also readjust the ‘threshold’ values to establish age parity in this case.
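
The age parity check and the threshold adjustment can be sketched as follows. The model scores are hypothetical, chosen only so that a single 0.5 cut-off approves 5 of 7 YA applicants and 1 of 4 OA applicants, in line with the percentages quoted above. The helper names and the "closest rate" rule for picking a group-specific threshold are likewise assumptions for illustration. The sketch covers only the parity measurement and threshold step, not the explanation of why the model scored applicants as it did.

```python
def approval_rate(scores, threshold):
    """Share of applicants whose model score meets or exceeds the threshold."""
    return sum(score >= threshold for score in scores) / len(scores)

def closest_threshold(scores, target_rate):
    """Pick the observed score that, used as a cut-off, gives the rate nearest the target."""
    candidates = sorted(set(scores))
    return min(candidates, key=lambda t: abs(approval_rate(scores, t) - target_rate))

# Hypothetical model scores per age group (not taken from the article).
ya_scores = [0.91, 0.84, 0.77, 0.66, 0.58, 0.41, 0.33]
oa_scores = [0.72, 0.48, 0.45, 0.30]

shared_threshold = 0.5
ya_rate = approval_rate(ya_scores, shared_threshold)
oa_rate = approval_rate(oa_scores, shared_threshold)
print(f"YA approval rate: {ya_rate:.2%}, OA approval rate: {oa_rate:.2%}")
print(f"parity gap with a shared threshold: {ya_rate - oa_rate:.2%}")

# Re-tune only the OA threshold so its approval rate moves toward the YA rate.
oa_threshold = closest_threshold(oa_scores, ya_rate)
oa_rate_adjusted = approval_rate(oa_scores, oa_threshold)
print(f"adjusted OA threshold: {oa_threshold}, OA approval rate: {oa_rate_adjusted:.2%}")
print(f"parity gap after adjustment: {abs(ya_rate - oa_rate_adjusted):.2%}")
```

With so few applicants, exact parity may not be reachable, so the sketch settles for the nearest achievable rate. In practice, the explanations behind individual predictions would inform whether moving the threshold is the right remedy or whether the features themselves need revisiting.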

Checkmate AI Bias with Debiasing & XAI Precision

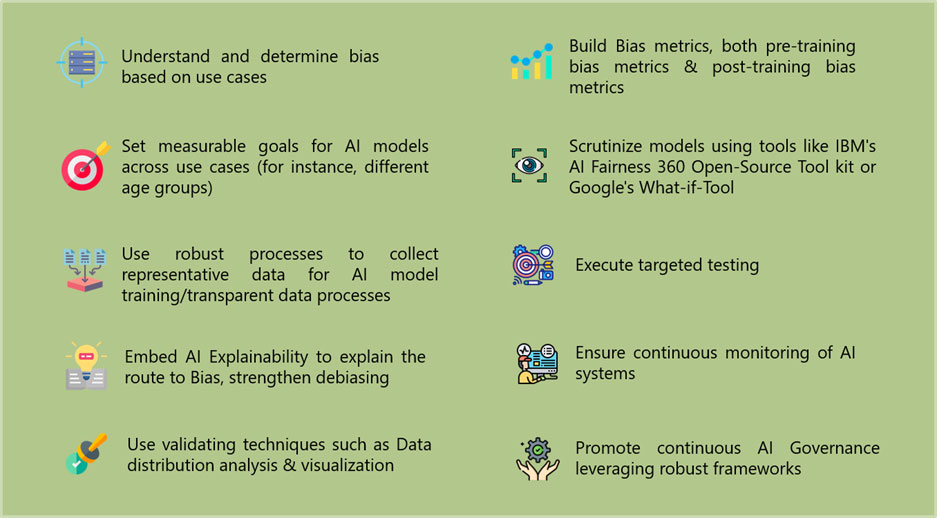

The debiasing strategy must combine the AI Ethics playbook (with its pillars of transparency, fairness, explainability, and accountability) with best practices to detect and mitigate AI bias. On the technical side, organizations can adhere to the practical best practices captured here to identify and thwart bias in data and algorithms, and embed explainability to do away with the negative impact of AI bias on model and outcome accuracy.

‘Measure twice, cut once’ seems to resonate with the AI world. Before the adverse effects of AI bias reach Healthcare, Financial Services, Retail, and other industries, pairing AI bias mitigation with Explainable AI can set the debiasing tone to eliminate bias from AI systems.

All examples quoted in the article, such as the loan prediction algorithm picking up bias from features including age and gender, are included for the sake of better understanding.