Oops! An "Ops" error occurs whenever MLOps and AIOps are lumped together. The two may evoke a striking similarity, but they are entirely different disciplines serving different purposes. For one, MLOps standardizes the deployment of machine learning models, while AIOps automates IT operations.

Let us consider two scenarios that highlight the difference in core principles.

Scenario 1

A financial services company provides analytical tools and data models that help customers manage financial risk. It developed an API for sharing improved models with customers, enabling instant redeployment of models refined on the basis of feedback, which were then embedded into product releases. This is a classic case of MLOps: feedback was used to redeploy improved models, reducing the process time by 85%.

Scenario 2

Another financial services company faced a different problem: it struggled to detect cyber threats, recognize cloud infrastructure failures, and create proactive alerts for the IT Ops team. The IT infra and IT ops teams have since moved from firefighting mode to proactive alerts, intelligent diagnosis, and faster resolution, which help them make effective decisions, keep cyber-attacks at bay, and promote a positive customer experience. The credit goes to AIOps, which uses big data, AI, and ML to automate and transform IT operations.

Of the two scenarios, the first illustrates MLOps and the second captures the essence of AIOps.

MLOps vs AIOps – A Quick Reference

Here’s a crisp comparison of MLOps and AIOps.

| MLOps | AIOps |
| --- | --- |
| Productionizes machine learning models, also recognized as DevOps for ML pipelines; facilitates shared infra for data engineering and data science teams | Automates IT operations leveraging big data & ML including causality determination, anomaly detection, event correlation |
| Embeds continuous learning and monitoring facilitating robust governance and continuous refinement of machine learning models | Enables faster and accurate analysis of root causes and insights on reasons beyond the obvious |
| Mitigates bias and ensures fairness through model validation | Provides proactive alerts & automates problem resolutions, enables preventive maintenance |
| Manages ML lifecycle | Manages vulnerability risks continually |

MLOps vs AIOps – Concept & Goal

MLOps is a concept designed to automate the development, implementation, and management of ML models. The goal is to create a shared infrastructure for data engineers, data scientists, and operations teams, apply DevOps to ML pipelines, and establish collaboration and a deep shared understanding among stakeholders.

The concept of AIOps champions the automation of activities associated with IT operations, including outlier detection, anomaly detection, and causality determination. The goal is to leverage big data, AI, and machine learning to embed intelligent automation, unearth the root causes of incidents, and offer insightful diagnostic information that accelerates incident resolution. For instance, to determine how many resources should be allocated for application performance, AIOps can tap into data and come up with the optimal resource mix.
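As a rough illustration of the anomaly detection mentioned above, here is a minimal sketch that flags outliers in a metric series using z-scores. The function name `find_anomalies`, the sample CPU readings, and the threshold of 2.0 are assumptions made for this example; production AIOps platforms typically rely on far more sophisticated models.

```python
from statistics import mean, stdev


def find_anomalies(samples, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    if len(samples) < 2:
        return []  # a standard deviation needs at least two points
    mu = mean(samples)
    sigma = stdev(samples)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, x) for i, x in enumerate(samples)
        if abs(x - mu) / sigma > threshold
    ]


# Hypothetical CPU utilisation readings (percent) with one obvious spike.
cpu_percent = [41, 43, 40, 42, 44, 39, 41, 97, 42, 40]
anomalies = find_anomalies(cpu_percent)
print(anomalies)
```

A single large spike inflates the standard deviation it is measured against, so a robust variant (for example, one based on the median) may be preferable on short series.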

MLOps vs AIOps – Orchestration, Setup Workflow

MLOps eliminates siloed pipelines. A machine learning project is made up of different pipelines covering pre-processing, feature engineering, and model validation; MLOps orchestrates these pipelines so that the ML model moves through them automatically.

The MLOps setup champions CI/CD infrastructure, automated model retraining after deployment, explainability, audits, and fairness checks, as highlighted in the figure below.

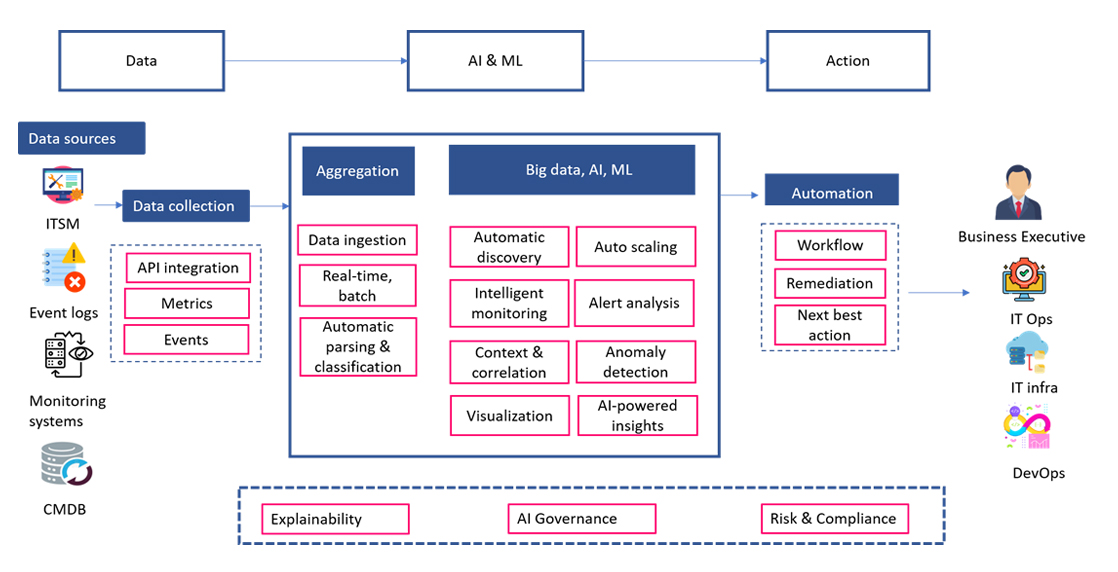
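To make the idea of orchestrating otherwise siloed pipelines concrete, here is a minimal sketch in which pre-processing, feature engineering, and validation run as ordered stages over one shared context. All stage names, the `min_kept_ratio` gate, and the sample data are hypothetical; real setups would normally delegate this to a workflow or CI/CD tool.

```python
def preprocess(ctx):
    """Drop missing readings from the raw data."""
    ctx["clean"] = [x for x in ctx["raw"] if x is not None]
    return ctx


def engineer_features(ctx):
    """Scale the cleaned values into the 0..1 range."""
    highest = max(ctx["clean"])
    ctx["features"] = [x / highest for x in ctx["clean"]]
    return ctx


def validate(ctx):
    """Gate: stop the run if too much data was dropped upstream."""
    kept_ratio = len(ctx["clean"]) / len(ctx["raw"])
    if kept_ratio < ctx["min_kept_ratio"]:
        raise ValueError(f"only {kept_ratio:.0%} of rows survived cleaning")
    ctx["validated"] = True
    return ctx


def run_pipeline(stages, ctx):
    """Run each stage in order and record which ones completed."""
    ctx = dict(ctx)  # shallow copy so the caller's dict gains no new keys
    ctx["completed"] = []
    for stage in stages:
        ctx = stage(ctx)
        ctx["completed"].append(stage.__name__)
    return ctx


result = run_pipeline(
    [preprocess, engineer_features, validate],
    {"raw": [2, None, 4, 8], "min_kept_ratio": 0.5},
)
print(result["completed"], result["features"])
```

Because every stage reads from and writes to the same context, a failure in one stage halts the whole run instead of silently feeding bad data to the next pipeline.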

AIOps orchestrates the automation of remediation, resolution, workflows, and next-best-action recommendations for the incidents and issues that challenge the IT Ops and IT infra teams. The data-to-action workflow starts with IT ops data from multiple tools being aggregated in the AI, ML, and big data layer; AI techniques are then applied to enable automated remediation and the next best action, as illustrated in the figure below.

MLOps vs AIOps – Toolset

Implementing MLOps successfully is one ask; using MLOps toolsets judiciously to get there is another. Here are a few toolsets that help adopt and implement MLOps.

- Azure ML is used to orchestrate MLOps for creating reproducible ML pipelines and reusable software environments, deploying models from anywhere, and automating ML lifecycle. Azure ML combines with DevOps to orchestrate CI/CD and lay the retraining pipeline for AI applications.

- Amazon SageMaker is used to orchestrate MLOps for creating repeatable training workflows. It facilitates central cataloguing of ML artefacts for model reproducibility, uses CI/CD to integrate ML flows, and allows continuous monitoring of ML models in production.

- MLflow supports MLOps with seamless management of the ML lifecycle across experimentation, testing, and deployment. MLflow empowers ML engineers with dashboards for tracking ML model performance.

To the question ‘How can the potential of AIOps be harnessed?’, the answer lies in AIOps tools that use AI to speed up problem resolution across IT environments. Here are some AIOps toolsets that help organizations take advantage of the power of AIOps.

- IBM Watson AIOps has all flavours of AIOps, from deploying explainability to integrating with monitoring tools and automating tasks, facilitating faster diagnosis and promoting transparent decision making via ChatOps.

- Splunk Enterprise promotes AIOps across areas including data intelligence, security, alerting, and threat actor collections to offer real-time operational insights. The tool facilitates log monitoring on various OS platforms.

- LogicMonitor is an AIOps platform serving the IT infra and IT ops teams with the wisdom of ‘what is going to happen before it happens’. Augmenting monitoring across server monitoring, cloud monitoring, network monitoring, database monitoring and application monitoring, LogicMonitor champions automation, illuminates patterns, and offers insights in terms of warning bells preceding issues.

MLOps vs AIOps – Key Capabilities

Here are the key capabilities of MLOps that pave the way for seamless productionizing of ML models.

- CI/CD & Automation – Imagine there are 8 ML client solutions. If they fail at some point, the crucial question becomes ‘How many teams must be deployed to maintain the solutions?’

Automation, a key MLOps capability, puts this ordeal to rest. Now there can be hundreds of machine learning solutions functioning in parallel. And the CI/CD factor ensures that the end-to-end pipeline is automated.

- Feature Store – How about taking advantage of features from pre-trained models?

Data processing, where practitioners work with features, consumes about 70% of the time in an ML project. Feature stores allow features as well as their metadata to be stored in a central repository that can be easily accessed.

- Reproducibility & Versioning – Data and models can be versioned so that ML experiments can be reproduced. ML teams can dig into past experiments and decide on the best fit. Through this capability, MLOps prevents corrupted versions of past data from being reused and muddling current experiments.

- ML framework testing & automated model-correction pipeline – Apart from model, feature, and data testing, the ML framework itself is tested for resource utilization, performance, and compliance. With continuous monitoring in place, MLOps offers automated model-correction pipelines to ensure the ML solution is not lagging and there is no downtime.

- Operational metrics – Another key MLOps capability, operational metrics such as deployment frequency, prediction processing time, and retraining frequency help capture warning signals and address red flags before they can impact workflows.
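The feature store and versioning capabilities above can be sketched together. The example below is a toy in-memory store, not the API of any real feature store product: the class `FeatureStore`, its methods, and the feature name `avg_balance` are all hypothetical. Each registered feature is keyed by a content hash, so identical data always maps to the same version and any change produces a new one.

```python
import hashlib
import json


class FeatureStore:
    """Toy central repository for features and their metadata."""

    def __init__(self):
        self._features = {}  # name -> {version -> record}

    def register(self, name, values, description):
        """Store a feature and return a version derived from its content."""
        payload = json.dumps(values, sort_keys=True).encode("utf-8")
        version = hashlib.sha256(payload).hexdigest()[:12]
        self._features.setdefault(name, {})[version] = {
            "values": values,
            "description": description,
        }
        return version

    def get(self, name, version):
        """Fetch the exact values used by a past experiment."""
        return self._features[name][version]["values"]

    def versions(self, name):
        """List known versions of a feature in registration order."""
        return list(self._features.get(name, {}))


store = FeatureStore()
v1 = store.register("avg_balance", [100.0, 250.5], "30-day average balance")
v2 = store.register("avg_balance", [100.0, 260.0], "30-day average balance")
print(store.versions("avg_balance"))
```

Pinning an experiment to `v1` means it can be rerun later against exactly the same feature values, even after newer versions have been registered.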

AIOps brings the following traits to empower IT ops teams with speedy issue resolution.

- Noise reduction – Cutting through the clutter to home in on critical alerts is a significant capability that accelerates detection and issue resolution.

- Teamwork – The key takeaway from AIOps is that it promotes seamless collaboration among several teams, accelerating resolution and reducing downtimes.

- De-siloed information and correlation – AIOps empowers teams to get rid of silos, correlate information across many data sources, and acquire a contextualized vision across network, infrastructure, storage, and applications.

- Automation – Enabling automated workflows for recurring incidents, AIOps helps tap into the value of RCA and knowledge recycling.
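As a hedged illustration of noise reduction and correlation, the sketch below suppresses repeated alerts for the same host and check inside a time window, then groups what remains by host. The alert fields (`ts`, `host`, `check`), the 300-second window, and the function names are assumptions made for this example only.

```python
from collections import defaultdict


def deduplicate(alerts, window_seconds=300):
    """Keep one alert per (host, check) within each time window."""
    last_kept = {}  # (host, check) -> timestamp of the last alert kept
    kept = []
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        key = (alert["host"], alert["check"])
        previous = last_kept.get(key)
        if previous is None or alert["ts"] - previous >= window_seconds:
            kept.append(alert)
            last_kept[key] = alert["ts"]
        # otherwise the alert is a repeat inside the window and is dropped
    return kept


def correlate_by_host(alerts):
    """Group checks by host to give a per-host view of what is failing."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert["host"]].append(alert["check"])
    return dict(groups)


raw_alerts = [
    {"ts": 0, "host": "db1", "check": "disk"},
    {"ts": 60, "host": "db1", "check": "disk"},
    {"ts": 90, "host": "web1", "check": "latency"},
    {"ts": 120, "host": "db1", "check": "disk"},
    {"ts": 400, "host": "db1", "check": "disk"},
]
critical = deduplicate(raw_alerts)
by_host = correlate_by_host(critical)
print(len(raw_alerts), "alerts reduced to", len(critical))
```

The window is measured from the last alert that was kept, so a persistent problem resurfaces once per window instead of flooding the team.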

MLOps vs AIOps – Teamwork

Teamwork promoted by MLOps is well supported by a collaborative development environment that offers access to information, data, tools, and code.

Let’s take the codebase, for instance. When ML engineers develop the application codebase, data scientists gain access to it and collaborate by submitting pull requests. Likewise, while data scientists build their R&D codebase, engineers are empowered to contribute to it.

Keeping ML operational intent at the core, ML and data science teams can work closely with the ops team to align business strategies and goals. The Ops team, in turn, can track problems while monitoring the development process, keep stakeholders informed instantly, and reach out to the development team for support at the same time.

AIOps fosters a collaborative environment for the IT Ops, IT infra, SRE, and business unit team members, and streamlines workflow and collaboration activities between IT teams and business teams. It also automates the collaborative process encompassing diagnostics and remediation.

From concept to orchestration, MLOps is different from AIOps. Simply put, when processes are to be standardized, MLOps steps in. When machines are to be automated, AIOps chips in.